In Jevons we trust?

In the wake of DeepSeek’s “Sputnik Moment”, the AI-driven thesis that hypercharged recent nuclear development faces a stern challenge

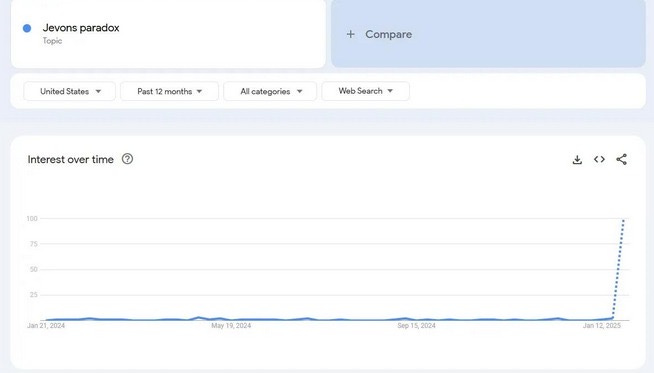

Jevons' Paradox states that when a resource becomes cheaper or more efficient to use, its overall demand often increases rather than decreases. If you’re in the energy world, you have probably already heard of it. Well, this was the week that everybody else caught up, thanks to a Chinese AI model called “DeepSeek-R1.”

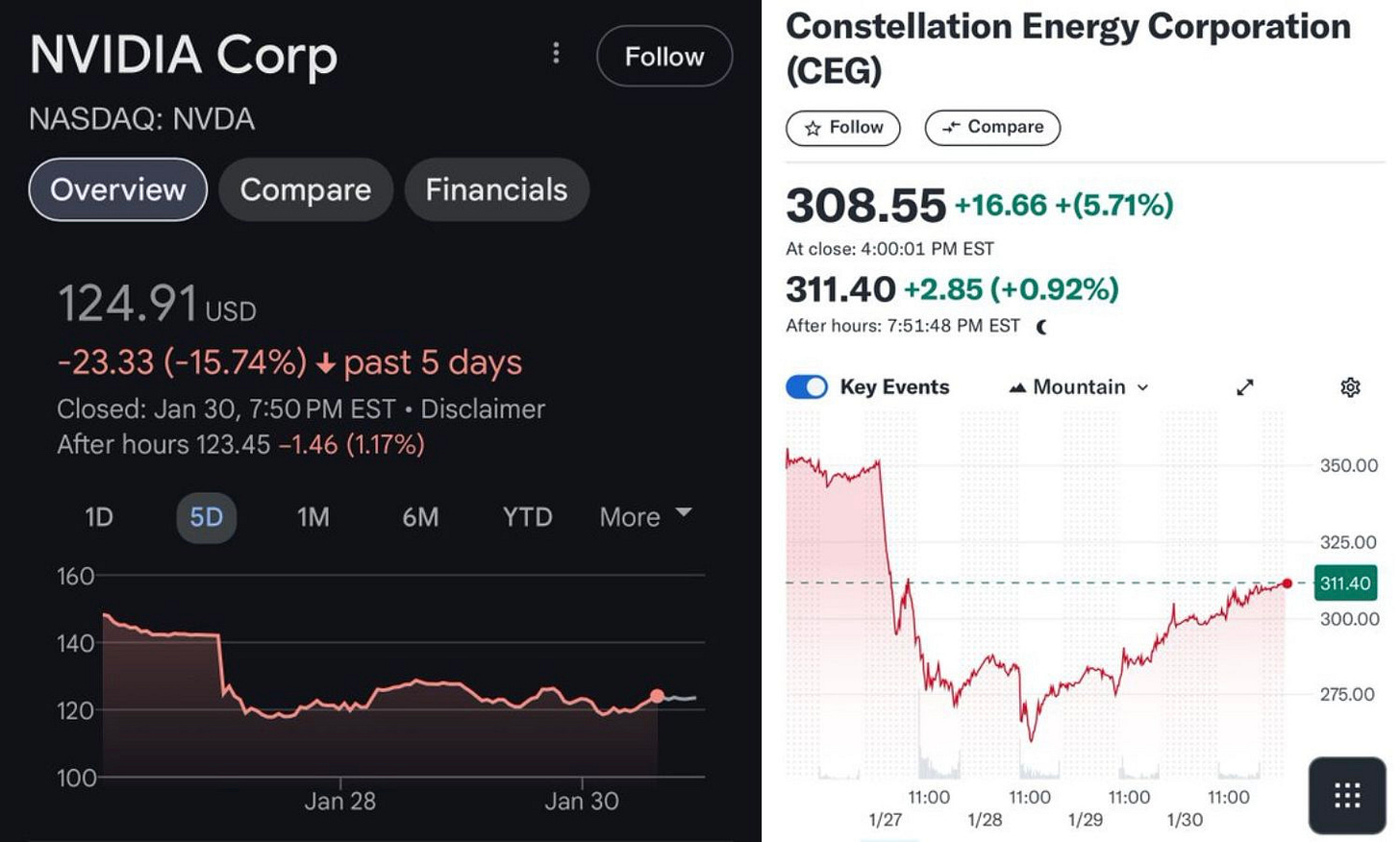

To cut a long story short, DeepSeek-R1 took the world by storm by matching or even outperforming top Western models on certain metrics. The fact that it did this on a fraction of the compute and at 3% of the price of OpenAI’s flagship model was such a deep shock that it caused the stock price of Nvidia, maker of the world’s best hardware for training AI, to crash by 17% in one day.

Constellation Energy and other nuclear-themed stocks dropped in lockstep, although both they and Nvidia have since stabilized.

Predictive vs. Reasoning

How did DeepSeek-R1 make such a significant leap in efficiency? The big difference is in the model’s architecture — how it is structured to process information. Think of it like an electricity system that meets the same demand with fewer generators by optimizing the grid for efficiency.

Traditional predictive AI models like ChatGPT have been described as “spicy autocomplete” because they generate responses based on statistical probabilities derived from massive amounts of training data: they predict the most likely next word, sentence or idea based on learned patterns.

Reasoning models, on the other hand, take more structured steps. This allows them to tackle complex problems and arrive at sophisticated answers without relying as heavily on massive datasets.

This new generation of reasoning models (including OpenAI’s own o1 and o3-mini) is faster and cheaper to train, in addition to being better at complex problem-solving. That upends the conventional wisdom that the winner of the AI race will simply be the company that shovels the most compute into its models. But if AI is getting cheaper to train, does that mean the massive electricity demand we’ve been projecting is overblown?

Enter Jevons

Necessity is the mother of invention. DeepSeek managed to be very efficient because it was starved for compute due to the US ban on high-end chips sold to China. But now the algorithmic cat is out of the bag.

If you believe in Mr Jevons, the fact that it just became more efficient to train AI just means we are going to end up using more of it as costs come down. That is to say, as soon as all the players start adopting the newer, more efficient training approach, we are back to the game of “more compute wins.” If that is the case, the “more AI needs more chips needs more power” logic still holds, which is a good thing for nuclear development.
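For readers who like to see the arithmetic, here is a minimal sketch of the Jevons logic. It assumes a simple constant-elasticity demand curve, which is a textbook simplification and not a forecast of the AI market. The function name, the `elasticity` parameter and every number below are hypothetical, chosen only to illustrate the mechanism.

```python
def total_compute_after_efficiency_gain(
    efficiency_gain: float,
    elasticity: float,
    baseline_compute: float = 1.0,
) -> float:
    """Total compute used after AI becomes `efficiency_gain` times cheaper per task.

    Assumption (hypothetical model): the number of tasks people want scales as
    efficiency_gain ** elasticity, i.e. constant price elasticity of demand.
    """
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    # Each task now needs only 1/efficiency_gain of the compute it used to.
    compute_per_task = 1.0 / efficiency_gain
    # Cheaper tasks mean more tasks get run; elasticity sets how many more.
    tasks_multiplier = efficiency_gain ** elasticity
    return baseline_compute * tasks_multiplier * compute_per_task


if __name__ == "__main__":
    # Illustrative values only: a 10x efficiency gain under three demand responses.
    for elasticity in (0.5, 1.0, 1.5):
        total = total_compute_after_efficiency_gain(10.0, elasticity)
        print(f"elasticity={elasticity}: total compute = {total:.2f}x baseline")
```

In this toy model, total compute only rises when the elasticity is above 1. That is the real bet behind the Jevons argument: that demand for AI grows faster than its cost falls.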

This is obviously what OpenAI and friends are banking on with the $500 billion “Stargate” super project to build out more data centers and the energy infrastructure that supports them.

As long as there is no evidence that scaling is hitting a wall, more is still more, and hyperscalers are going to hyperscale.

Cheaper training, more expensive inference?

Even if Jevons' Paradox does not hold and the cost of training AI becomes permanently lower, this does not necessarily mean AI is going to need less juice overall. While the new reasoning models are cheaper to train, they are more expensive to run.

That is because reasoning models often need more computational resources during inference to perform complex tasks. Every time there is a new query, a reasoning model has to do more computation on the fly, which makes it power-intensive over time. Data centers will still need stable, high-output power sources, and nuclear remains one of the few viable options for scaling that supply sustainably. However, the energy demand pattern might shift.
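The trade-off can be sketched as a simple break-even calculation. This is a back-of-the-envelope illustration under stated assumptions, not measured data: the function names are made up for this example, energy is in arbitrary units, and all figures are hypothetical.

```python
def lifetime_energy(training_energy: float, energy_per_query: float, num_queries: float) -> float:
    """One-off training energy plus the running total of inference energy."""
    return training_energy + energy_per_query * num_queries


def break_even_queries(
    train_a: float, per_query_a: float, train_b: float, per_query_b: float
) -> float:
    """Query count at which model B's lifetime energy catches up with model A's.

    Assumes B is cheaper to train but costlier per query than A.
    """
    if not (train_b < train_a and per_query_b > per_query_a):
        raise ValueError("expected B to be cheaper to train and costlier to run than A")
    return (train_a - train_b) / (per_query_b - per_query_a)


if __name__ == "__main__":
    # Hypothetical numbers: a "predictive" model A and a "reasoning" model B.
    a = {"train": 1000.0, "per_query": 0.001}
    b = {"train": 100.0, "per_query": 0.01}
    n = break_even_queries(a["train"], a["per_query"], b["train"], b["per_query"])
    print(f"B overtakes A after roughly {n:,.0f} queries")
```

Past the break-even point, the model that was cheaper to train is the bigger energy consumer, and the gap widens with every additional query.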

Instead of mega-clusters of power-hungry data centers dedicated to training, the real AI-related power demand of the future might arise wherever the models are being run: cloud services, enterprise data centers, and even the palm of your hand if edge-computing devices become a reality.

THE ELEMENTAL TAKE

The story that AI will depend on endless energy helped propel nuclear back into the spotlight. That story is still alive, although it is not as strong as it was a week ago. To my mind, that’s OK.

We still need more nuclear energy. We still need it for AI, but also for electrification, deep decarbonization and national security. Beyond the AI hype, the case for nuclear is timeless. However, the industry might do well to stay a little closer to the fundamental case for the technology. The seemingly endless spigot of tech money rushing into nuclear just because it is connected to the biggest topic in town might not flow forever.

If the majority of AI demand ends up coming from inference, not training, that demand is going to be less centralized than we think. Instead of being coupled behind the meter to ginormous data centers, nuclear power plants will perform their traditional role: as the backbone of a strong and healthy grid with robust demand coming from AI usage, which is only set to increase in the future.

The question then becomes: when does the world become saturated with AI? And will we even be able to recognize it by the time it is done? At that point, “what is the likely demand for nuclear new-build projects” might be the least of our concerns.

Interesting for me to learn that AI-driven electricity demand could become more distributed. I much prefer that future, because the most disadvantaged benefit the most from having a robust grid. It feels dystopic to build nuclear power plants only to cordon them off from the people.

This is one of the only decent takes I have seen on this whole DeepSeek thing. My goodness the world has just become so reactionary. Thanks!